I try to avoid posting about AI, but today I found myself wondering: did I just chat with an AI?

Trying to decide which of several products to purchase online, I used the company’s website chatbot, which transferred me to “Vanessa” for further assistance.

Was Vanessa real? I found myself evaluating every line.

The overly enthusiastic and strangely polished parts? That’s probably AI.

But what about…

- The natural-sounding, competent, useful responses?

- The grammatical errors?

- The inability to remember bits of context from earlier in the conversation?

- Delays where the “agent is typing” and I’m waiting…

- …but then I get an “are you still there?” a minute later while I’m typing?

- The occasional complete nonsense, sort of an on-topic word salad?

What’s wild to me is that I have experienced all of those things in the days before AI chat, when you could be reasonably certain you were talking to a human. So, on one hand, any of these could be a human behind the wheel.

As if AI chatbots had a physical incarnation with a hand

But then again, maybe AI would say: “How is you’re day so far?” If so, was the grammar error deliberately inserted, to make it sound more human? Or did AI just learn from a lot of grammatically incorrect training data?

Humans of yore

Once upon a time, you chatted (phone or text) with a human. They either knew how to help, or they would have you hold while they talked to someone else on your behalf. You could picture someone sitting in an office somewhere, spinning their office chair around to ask the senior person at the cubicle behind them.

Later, you chatted with a human who generally didn’t know how to help, but who could follow a flowchart of common questions. I worked in tech support; I get it. 90% of the calls are answered by the flowchart.

Unfortunately, those people would often not recognize when you were off the flowchart. I might say “step 3 of your instructions refers to a button that doesn’t exist,” and they’d just send me the link to the same instructions I was already following.

When they ran out of flowchart, they’d send you to tier 2. But even the flowchart person was still someone sitting in an office somewhere.

“The button doesn’t exist? That’s not on the flowchart.” Photo by Arlington Research on Unsplash

They might be following a script. But when it said “Agent is typing…” on the screen, they were at least assembling a reply from various bits of canned text with a little original text thrown in.

Where’s my person?

It was never clear to me how much “Vanessa” was controlled by a human operator. If at all.

I hope for the sake of that company that there was at least some human oversight to prevent inappropriate responses.

But, at best, I imagined someone monitoring multiple chats to make sure the AI responses weren’t going off the rails. Would they even need to know the product? Maybe their software could be doing some real-time sentiment rating to flag when the user is getting frustrated.

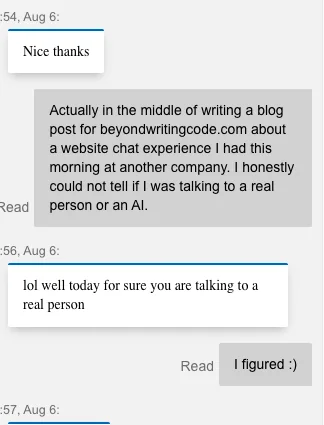

The contrast was striking when, later in the day, I had a chat with “Julian” from Microsoft’s online sales chat feature. Aside from one overly enthusiastic greeting (on the order of “It’s an absolute delight to assist you today! How can I help you make your business more successful?”) the conversation… just felt normal.

The wording and grammar weren’t perfect. But they didn’t seem unprofessional; they just seemed human.

Microsoft likely has access to the best AI a company can get. If I had to pick a company that might have AI so good they could fake me out, they’d certainly be on my list of candidates.

But I feel like Vanessa’s response to “I honestly could not tell if I was talking to a real person or an AI” might have been something more… AI-sounding.

What would an AI say if asked that, anyway?

I couldn’t get claude.ai to be deceptive about it, even hypothetically. But I did get it to tell me what a human could say to reassure a customer. I wanted an AI-generated example to contrast with Julian’s response above.

“Ha, I get that question sometimes! I do use assistance with my writing to make sure I’m being as helpful as possible, but there’s definitely a human behind these messages.”

Well done, Claude. No need to escalate to tier 2 support.