Humans, vampires, and why you aren't Steve Yegge

Reclaiming your humanity is necessary as protection against AI vampires. Even Steve Yegge will tell you so.

How do we manage staggering amounts of information and keep learning? And is AI melting our brains?

Early morning yesterday, I was wide awake at 4:30 a.m. for no clear reason. And I had one of those 4:30 a.m. thoughts: You know, I could move my personal website off of AWS, and save some money. In fa...

A recent poll on LinkedIn from Alex Ewerlöf asked: “Would you read AI-generated posts and articles that are attached to a human name and picture?” The poll offered four choices: My first thought was: ...

How developer roles are transforming, emphasizing "people and processes" over AI technology.

AI coding practices and reader poll questions about developer experiences with AI assistance.

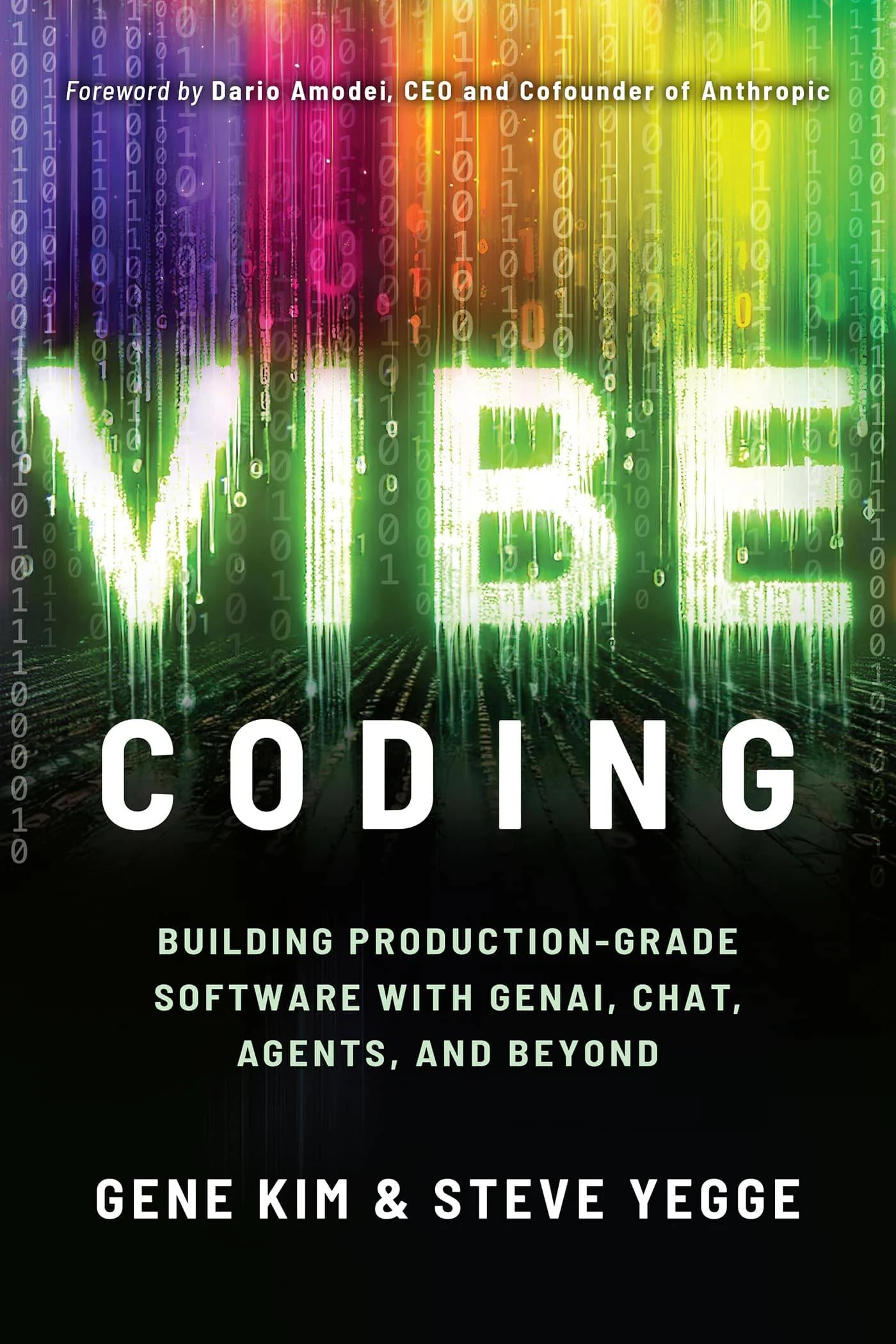

It's been a long time since I've felt like I was hanging on every word of a book. I was so done reading about AI. Then I saw that IT Revolution was publishing a new book: Vibe Coding: Building Produ...

I try to avoid posting about AI, but today I found myself wondering: did I just chat with an AI? Trying to decide which of several products to purchase online, I used the company’s website chatbot, wh...

Another post about AI? I just made one earlier this afternooooon… But then Forrest Brazeal posted this, which got me thinking: If we treat AI like a junior developer, do we interview AI the way we int...

I’ve seen a few posts on social media with questions like: Why are we still trying to figure out exactly how much more productive developers are with GitHub Copilot when it is so cheap? Who cares? Jus...

How AI changes what developers do, with emphasis on people and processes over technology.

I once had a colleague who (jokingly) left this comment on a code review, and not in reference to a specific line of code: “Missing semicolon.”