AI and I built a website yesterday

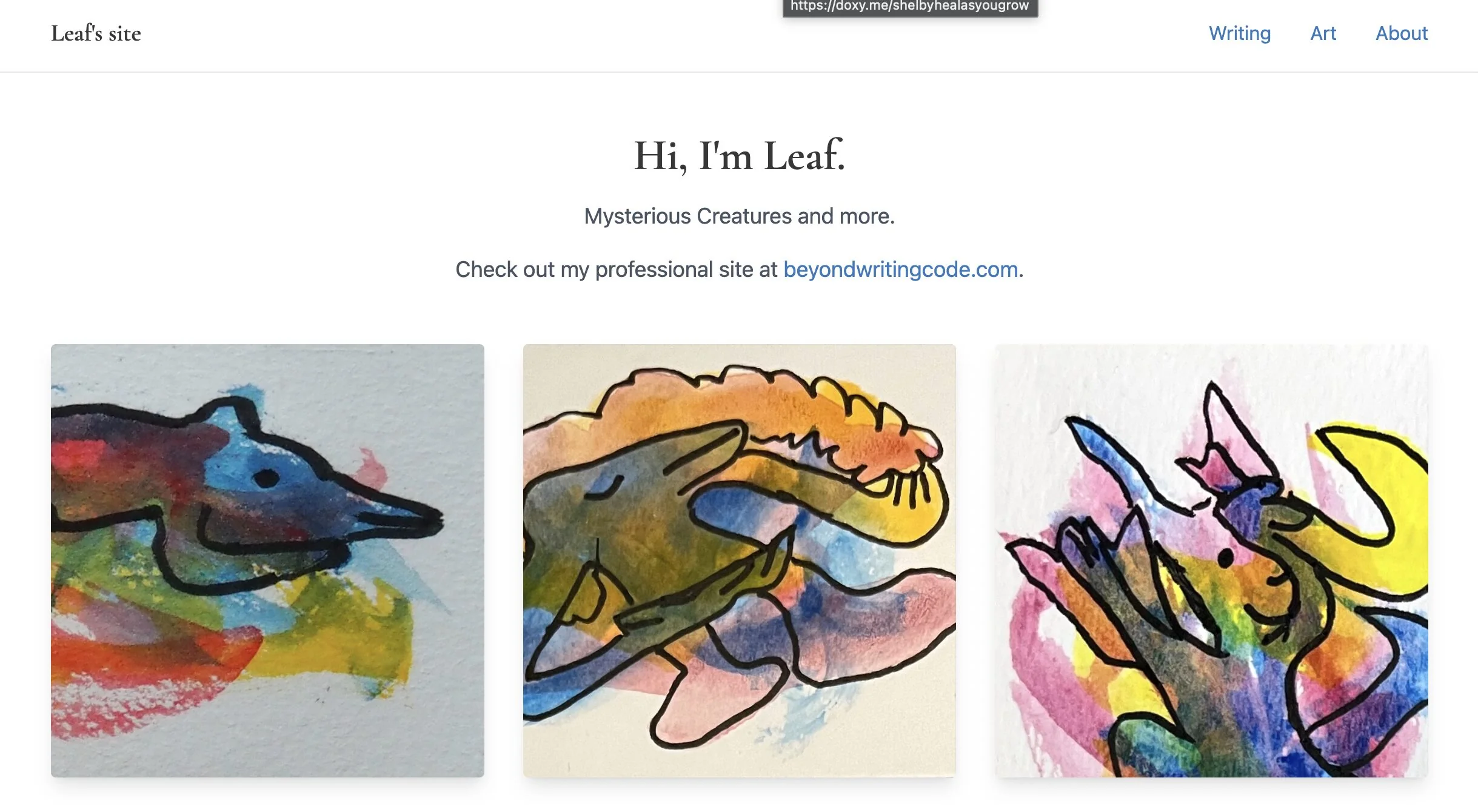

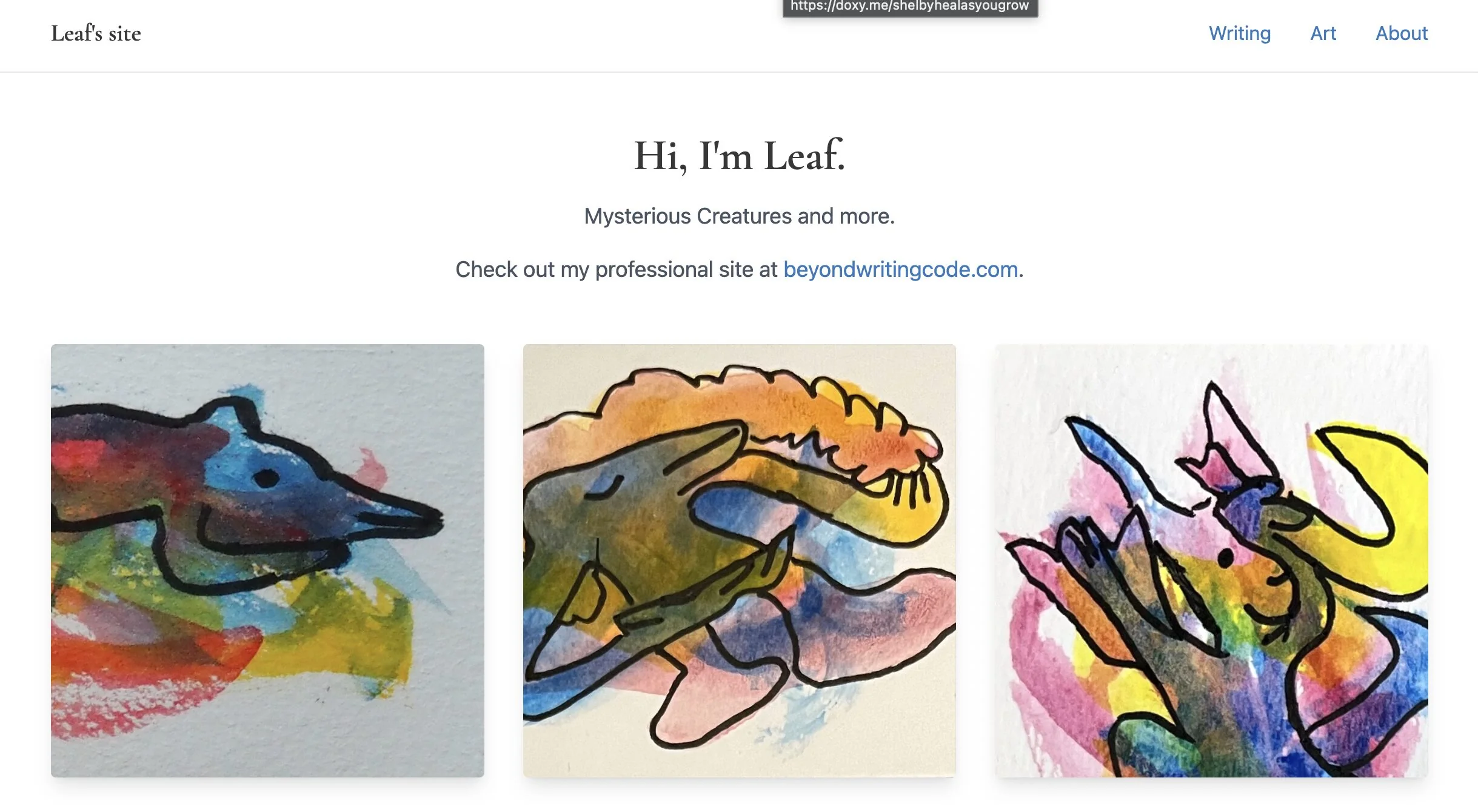

Early yesterday morning, I was wide awake at 4:30 a.m. for no clear reason. And I had one of those 4:30 a.m. thoughts: You know, I could move my personal website off of AWS and save some money. In fa...